
Fortran Program For Secant Method Numerical Notation

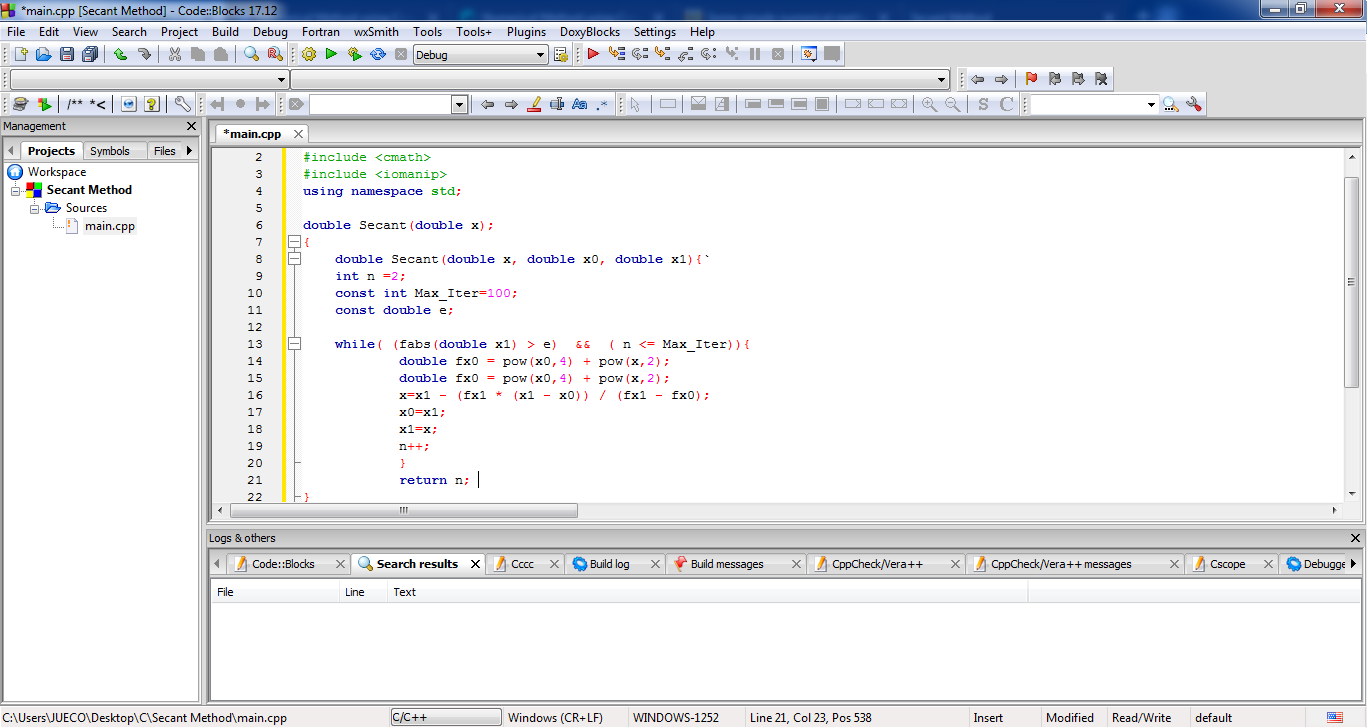

It is clear from the numerical results that the secant method requires more iterations than the Newton method (e.g., with Newton's method, the iterate $x_6$ is accurate to machine precision, around 16 decimal digits). Note, however, that the secant method does not require knowledge of $f'(x)$, whereas Newton's method requires both $f(x)$ and $f'(x)$.


M. K. Jain, S. R. K. Iyengar, R. K. Jain


Numerical Methods for Scientific and Engineering Computation

This book is appropriate as a textbook for the first course and, in part, for the second course in numerical analysis. The book is largely self-contained; courses in calculus and matrices are the essential prerequisites. Some of the special features of the book are: classical and recently developed numerical methods are derived from the viewpoint of high speed computation; a comparative study of the numerical methods brings out the advantages and disadvantages of implementing them; about 300 problems, including BIT problems (1964-83), are listed at the end of Chapters 2-7 to serve as exercises and extensions of the text; and the answers and hints to the problems at the end of the book, together with the solved examples in the text, will help students understand the basic concepts. Contents: High Speed Computation; Transcendental and Polynomial Equations; System of Linear Algebraic Equations and Eigenvalue Problems; Interpolation and Approximation; Differentiation and Integration; Ordinary Differential Equations; Partial Differential Equations.


Digitized by the Internet Archive in 2017 with funding from Kahle/Austin Foundation

https://archive.org/details/numericalmethodsOOjain_O


M. K. JAIN, S. R. K. IYENGAR, R. K. JAIN
Department of Mathematics, Indian Institute of Technology Delhi, India

A HALSTED PRESS BOOK

JOHN WILEY & SONS: New York, Chichester, Brisbane, Toronto, Singapore

Copyright © 1985 WILEY EASTERN LIMITED NEW DELHI

Published in the Western Hemisphere by Halsted Press, A Division of John Wiley & Sons, Inc., New York

Library of Congress Cataloging in Publication Data: Jain, M. K. (Mahinder Kumar), 1932- . Numerical methods for scientific and engineering computation. "A Halsted Press book." Bibliography: p. Includes index. 1. Numerical analysis. I. Iyenger, S. R. K. II. Jain, Rajendra K., 1951- . III. Title. QA297. 328 1984 519.4 84-22024 ISBN 0-470-20143-6

Printed in India at Urvashi Press, Meerut.

Preface

This book has grown from the lectures which we and our colleagues in the Department of Mathematics have delivered at the Indian Institute of Technology Delhi. Both undergraduate and postgraduate students of various engineering disciplines and M.Sc. mathematics (1st and 2nd year) students have attended these lectures. The material has also been covered in various summer schools organised by the Department of Mathematics at this Institute. Further, the book is based on the curriculum recommended by the workshop of Engineering College teachers held at IIT Delhi, sponsored by the Ministry of Education, Government of India. The book also contains a number of BIT problems which have appeared in the examinations of Course I and partly Course II of Numerical Analysis at the Universities and Institutes of Technology in the Scandinavian countries during the years 1964-1983. Thus, the book will meet the requirements of students taking a first course in Numerical Analysis at most international universities and Institutes of Technology. The book has seven chapters. Chapter 1 provides an introduction to computer arithmetic, errors and machine computation. Chapter 2 gives the direct and iterative methods for finding the roots of transcendental and polynomial equations, together with a brief section on the choice of an iterative method. Chapter 3 contains the direct and iterative methods for the solution of a system of linear algebraic equations. The error analysis and convergence of iterative methods are also given. Various methods for finding eigenvalues and eigenvectors are included. Chapter 4 gives the derivation of interpolating polynomials and approximating functions, and ends with a section on the choice of a method. Chapter 5 provides methods for numerical differentiation and integration. Extrapolation procedures are discussed in detail.
Chapters 6 and 7 have been adapted from the text entitled Numerical Solution of Differential Equations, 2nd edition, written by one of the present authors and published by Wiley Eastern Ltd., 1983. Chapter 6 includes a detailed treatment of single step and multistep methods for solving first order initial value problems. The difference and shooting methods for solving two point second order boundary value problems are also discussed. Chapter 7 contains numerical methods for the solution of parabolic, hyperbolic and elliptic partial differential equations. Chapters 2-7 are followed by problems, including BIT problems. A number of examples have been solved in each chapter to help the student understand the concepts described in the text. Flowcharts of the frequently used numerical methods for computer programs are given in Appendix 1. Answers to the problems are also listed at the end of the book. We wish to express our sincere thanks to Prof. C. E. Fröberg for allowing the use of BIT problems. Thanks are due to Mr. D. R. Joshi and Miss Neelam Dhody for typing the manuscript and to Mr. Ranjit Kumar for his assistance. Finally, we are thankful to the authorities of the Indian Institute of Technology, Delhi for providing the necessary facilities and encouragement.

New Delhi, October 1984

M. K. JAIN S. R. K. IYENGAR R. K. JAIN

Contents

Preface

CHAPTER 1. High Speed Computation
1.1 Introduction
1.2 Computer Arithmetic: Binary number system; Octal and hexadecimal system; Floating-point arithmetic
1.3 Errors: Significant digits and numerical instability
1.4 Machine Computation
1.5 Computer Software

CHAPTER 2. Transcendental and Polynomial Equations
2.1 Introduction: Initial approximations
2.2 The Bisection Method
2.3 Iteration Methods Based on First Degree Equation: Secant method; The Newton-Raphson method
2.4 Iteration Methods Based on Second Degree Equation: The Muller method; The Chebyshev method; Multipoint iteration method
2.5 Rate of Convergence: Secant method; The Newton-Raphson method
2.6 Iteration Methods: First order method; Second order method; High order methods; Acceleration of convergence; Efficiency of a method; Methods for multiple roots; Methods for complex roots
2.7 Polynomial Equations: The Birge-Vieta method; The Bairstow method; Graeffe's root squaring method
2.8 Choice of an Iterative Method and Implementation: Flowchart for a zero finder
Problems

CHAPTER 3. System of Linear Algebraic Equations and Eigenvalue Problems
3.1 Introduction
3.2 Direct Methods: Cramer rule; Gauss elimination method; Gauss-Jordan elimination method; Triangularization method; Cholesky method; Partition method
3.3 Error Analysis: Iterative improvement of the solution
3.4 Iteration Methods: Jacobi iteration method; Gauss-Seidel iteration method; Successive over-relaxation method; Convergence analysis; Iterative method for $A^{-1}$
3.5 Eigenvalues and Eigenvectors: Jacobi method for symmetric matrices; Givens' method for symmetric matrices; Householder's method for symmetric matrices; Rutishauser method for arbitrary matrices; Power method
3.6 Choice of a Method
Problems

CHAPTER 4. Interpolation and Approximation
4.1 Introduction
4.2 Lagrange and Newton Interpolations: Linear interpolation; Higher order interpolation
4.3 Finite Difference Operators
4.4 Interpolating Polynomials Using Finite Differences
4.5 Hermite Interpolation
4.6 Piecewise and Spline Interpolation
4.7 Bivariate Interpolation: Lagrange bivariate interpolation; Newton's bivariate interpolation for equispaced points
4.8 Approximation
4.9 Least Squares Approximation
4.10 Uniform Approximation: Uniform (minimax) polynomial approximation; Chebyshev polynomial approximation and Lanczos economization
4.11 Rational Approximation
4.12 Choice of a Method
Problems

CHAPTER 5. Differentiation and Integration
5.1 Introduction
5.2 Numerical Differentiation: Methods based on interpolation; Methods based on finite differences; Methods based on undetermined coefficients
5.3 Optimum Choice of Step-length
5.4 Extrapolation Methods
5.5 Partial Differentiation
5.6 Numerical Integration
5.7 Methods Based on Interpolation
5.8 Methods Based on Undetermined Coefficients: Gauss-Legendre integration method; Lobatto integration method; Radau integration method; Gauss-Chebyshev integration methods; Gauss-Laguerre integration methods; Gauss-Hermite integration methods
5.9 Composite Integration Methods: Trapezoidal rule; Simpson's rule
5.10 Romberg Integration
5.11 Double Integration: Trapezoidal method; Simpson's method
Problems

CHAPTER 6. Ordinary Differential Equations
6.1 Introduction
6.2 Numerical Methods: Euler method; Backward Euler method; Mid-point method
6.3 Single Step Methods: Taylor series method; Runge-Kutta methods; Implicit Runge-Kutta methods
6.4 Multistep Methods: Determination of $a_i$ and $b_i$; Convergence of multistep methods; Predictor-corrector methods
6.5 Stability Analysis: Singlestep methods; Multistep methods
6.6 Boundary Value Problems: Difference methods; Boundary value problem u' = f(x, u); Convergence of difference schemes; Shooting method
Problems

CHAPTER 7. Partial Differential Equations
7.1 Introduction
7.2 Difference Methods
7.3 Parabolic Equations: One space dimension; Convergence and stability analysis; Two space dimensions
7.4 Hyperbolic Equations: One space dimension; Two space dimensions; First order equation; System of equations
7.5 Elliptic Equations: Dirichlet problem; Neumann problem; Mixed problem
Problems

Answers and Hints to the Problems

Index

CHAPTER 1

High Speed Computation

1.1 INTRODUCTION

With the advent of modern high speed electronic digital computers, numerical methods have been successfully applied to study problems in mathematics, engineering, computer science and the physical sciences, such as biophysics, physics, atmospheric sciences and geosciences. The art and science of preparing and solving scientific and engineering problems have undergone considerable changes, for the following two reasons:

(i) The mathematical problem has to be reduced to a form amenable to machine solution.

(ii) Several million operations are performed per minute on a high speed computer. This makes it difficult to check the intermediate results for a possible build-up of large errors during the calculations.

In what follows, we examine these two aspects in detail.

1.2 COMPUTER ARITHMETIC

The basic arithmetic operations performed by the computer are addition, subtraction, multiplication and division. Decimal numbers are first converted to machine numbers, which are stored using only the digits 0 and 1 and are expressed in a base (or radix) that depends on the computer. If the base is two, eight or sixteen, the number system is called binary, octal or hexadecimal, respectively. The decimal number system has the base 10. The decimal integer 4987 actually means

$$(4987)_{10} = 4 \times 10^3 + 9 \times 10^2 + 8 \times 10^1 + 7 \times 10^0 \qquad (1.1)$$

which is a polynomial in the base 10. Similarly, the decimal fraction 0.6251 means

$$(0.6251)_{10} = 6 \times 10^{-1} + 2 \times 10^{-2} + 5 \times 10^{-3} + 1 \times 10^{-4} \qquad (1.2)$$

which is a polynomial in $10^{-1}$. Combining (1.1) and (1.2), we may write the number 4987.6251 in the decimal system as

$$(4987.6251)_{10} = 4 \times 10^3 + 9 \times 10^2 + 8 \times 10^1 + 7 \times 10^0 + 6 \times 10^{-1} + 2 \times 10^{-2} + 5 \times 10^{-3} + 1 \times 10^{-4} \qquad (1.3)$$

where the subscripts denote the base of the number system.
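Equations (1.1) to (1.3) say that a positional numeral is a polynomial in its base. The sketch below, assuming Python with the standard `fractions` module (used so the value stays exact), evaluates such a polynomial and converts a non-negative integer to base 2, 8 or 16 by repeated division. The function names `positional_value` and `integer_digits` are illustrative, not taken from the book.

```python
from fractions import Fraction


def positional_value(int_digits, frac_digits, base=10):
    """Exact value of (d_{n-1} ... d_0 . d_{-1} ... d_{-m}) in the given base.

    int_digits  -- [d_{n-1}, ..., d_1, d_0], most significant digit first
    frac_digits -- [d_{-1}, d_{-2}, ..., d_{-m}]
    """
    value = Fraction(0)
    for d in int_digits:                      # integer part by Horner's rule
        value = value * base + d
    for j, d in enumerate(frac_digits, start=1):
        value += Fraction(d, base ** j)       # d_{-j} * base^(-j)
    return value


def integer_digits(n, base):
    """Digits of the non-negative integer n in the given base, most significant first."""
    if n == 0:
        return [0]
    digits = []
    while n > 0:
        n, r = divmod(n, base)                # remainder is the next lowest digit
        digits.append(r)
    return digits[::-1]


print(positional_value([4, 9, 8, 7], [6, 2, 5, 1]))   # 49876251/10000, i.e. (1.3)
print(integer_digits(4987, 16))                       # [1, 3, 7, 11]
```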


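Thus a number $N = (d_{n-1} d_{n-2} \cdots d_0 . d_{-1} d_{-2} \cdots d_{-m})$ in the decimal system can always be expressed in the form

$$(N)_{10} = d_{n-1} 10^{n-1} + d_{n-2} 10^{n-2} + \cdots + d_1 10^1 + d_0 10^0 + d_{-1} 10^{-1} + \cdots + d_{-m} 10^{-m}$$

[…] the smaller root is given by

$$x = \frac{b - \sqrt{b^2 - 4ac}}{2a}$$

Here

$$a = 1 = .1000 \times 10^1, \qquad b = 400 = .4000 \times 10^3, \qquad c = 1 = .1000 \times 10^1$$

$$b^2 - 4ac = .1600 \times 10^6 - .4000 \times 10^1 = .1600 \times 10^6 \quad \text{(to four digit accuracy)}, \qquad \sqrt{b^2 - 4ac} = .4000 \times 10^3$$

Substituting in the above formula, we get $x = .0000$.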
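This loss of all significant digits can be reproduced with Python's standard `decimal` module, using a context with four significant digits. The sketch rests on two assumptions: the equation itself falls in the part omitted from this excerpt and is taken here to be $x^2 - 400x + 1 = 0$ (consistent with $a = 1$, $b = 400$, $c = 1$ and a positive root of .0025), and every operation rounds to nearest, whereas the book may chop. The second function uses the algebraically equivalent form $x = 2c/(b + \sqrt{b^2 - 4ac})$, which the text turns to next; all function names are illustrative.

```python
from decimal import Context, Decimal

# Four significant decimal digits; each operation below is rounded to nearest.
# (Assumption: the book may chop instead of round.)
CTX = Context(prec=4)


def sqrt_disc(a, b, c):
    """sqrt(b^2 - 4ac), every intermediate result rounded to four digits."""
    b2 = CTX.multiply(b, b)
    four_ac = CTX.multiply(CTX.multiply(Decimal(4), a), c)
    return CTX.sqrt(CTX.subtract(b2, four_ac))


def smaller_root_naive(a, b, c):
    """x = (b - sqrt(b^2 - 4ac)) / (2a): subtracts two nearly equal numbers."""
    numerator = CTX.subtract(b, sqrt_disc(a, b, c))
    return CTX.divide(numerator, CTX.multiply(Decimal(2), a))


def smaller_root_stable(a, b, c):
    """x = 2c / (b + sqrt(b^2 - 4ac)): algebraically equal, no cancellation."""
    denominator = CTX.add(b, sqrt_disc(a, b, c))
    return CTX.divide(CTX.multiply(Decimal(2), c), denominator)


a, b, c = Decimal(1), Decimal(400), Decimal(1)
print(float(smaller_root_naive(a, b, c)))    # 0.0
print(float(smaller_root_stable(a, b, c)))   # 0.0025
```

The rounded discriminant $.1600 \times 10^6$ has already lost the term $4ac$, so the naive numerator cancels to zero, while the rearranged form only adds positive quantities.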


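However, if we rewrite the above formula in the form

$$x = \frac{2c}{b + \sqrt{b^2 - 4ac}}$$

we get

$$x = \frac{.2000 \times 10^1}{.4000 \times 10^3 + .4000 \times 10^3} = \frac{.2000 \times 10^1}{.8000 \times 10^3} = .0025$$

which is the root correct to four significant digits.

Example 1.10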

Compute the midpoint of the numbers $a = 4.568$ and $b = 6.762$ using four digit arithmetic.

If we take the midpoint as the mean of the numbers, we have

$$c = \frac{a + b}{2} = \frac{.4568 \times 10^1 + .6762 \times 10^1}{2} = \frac{.1133 \times 10^2}{2} = .5660 \times 10^1$$

However, if we use the formula

$$c = a + \frac{b - a}{2}$$

we get

$$c = .4568 \times 10^1 + \frac{.6762 \times 10^1 - .4568 \times 10^1}{2} = .4568 \times 10^1 + .1097 \times 10^1 = .5665 \times 10^1$$

which is the correct result.

1.4 MACHINE COMPUTATION

To obtain meaningful results for a given problem using computers, there are five distinct phases:

1. choice of a method,
2. designing the algorithm,
3. flow charting,
4. programming,
5. computer execution.

A method is defined to be a mathematical formula for finding the solution of a given problem. There may be more than one method available to solve the same problem; we should choose the method which best suits the given problem. The inherent assumptions and limitations of the method must be studied carefully.


Once the method has been decided, we must describe a complete and unambiguous set of computational steps to be followed in a particular sequence to obtain the solution. This description is called an algorithm. It may be emphasized that the computer is concerned with the algorithm and not with the method. The algorithm tells the computer where to start, what information to use, what operations to carry out and in which order, what information to print and when to stop.

Example 1.11

Design an algorithm to find the real roots of the equation

$$ax^2 + bx + c = 0, \qquad a, b, c \text{ real}$$

for 10 sets of values of $a, b, c$, using the method

$$x_1 = \frac{-b + e}{2a}, \qquad x_2 = \frac{-b - e}{2a} \qquad (1.26)$$

where $e = \sqrt{b^2 - 4ac}$. The following computational steps are involved:

Step 1: Set $I = 1$.
Step 2: Read $a, b, c$.
Step 3: Check: is $a = 0$? If yes, print "wrong data" and go to Step 9; otherwise go to the next step.
Step 4: Calculate $d = b^2 - 4ac$.
Step 5: Check: is $d < 0$? If yes, print "complex roots" and go to Step 9; otherwise go to the next step.
Step 6: Calculate $e = \sqrt{b^2 - 4ac}$.
Step 7: Calculate $x_1$ and $x_2$ using method (1.26).
Step 8: Print $x_1$ and $x_2$.
Step 9: Increase $I$ by 1.
Step 10: Check: is $I \le 10$? If yes, go to Step 2; otherwise go to the next step.
Step 11: Stop.

On execution of the above eleven steps or instructions in the given order, the problem is completely solved. These eleven steps constitute the algorithm of the method (1.26). An algorithm has five important features:

1. Finiteness: an algorithm must terminate after a finite number of steps.
2. Definiteness: each step of an algorithm must be clearly defined, that is, the action to be taken must be unambiguously specified.
3. Inputs: an algorithm must specify the quantities which must be read before the algorithm can begin. In the algorithm of Example 1.11 the three input quantities are $a, b, c$.
4. Outputs: an algorithm must specify the quantities which are to be output and their proper place. In the algorithm of Example 1.11 the two output quantities are $x_1, x_2$.
5. Effectiveness: an algorithm must be effective, which means that all its operations are executable. For example, in the algorithm of Example 1.11 we must avoid the case $a = 0$, as division by zero is not possible. Similarly, if $b^2 - 4ac < 0$, some alternate path must be defined to avoid finding the square root of a negative number.

A flow chart is a graphical representation of a specific sequence of steps (algorithm) to be followed by the computer to produce the solution of a given problem. It uses flow chart symbols to represent the basic operations to be carried out. The various symbols are connected by arrows to indicate the flow of information and processing. While drawing a flow chart, any logical error in the formulation of the problem or in applying the algorithm can be easily seen and corrected. Some of the symbols used in drawing flow charts are given in Table 1.1.

Table 1.1 Flow Chart Symbols (symbol shapes not reproduced)

| Flow chart symbol | Meaning |
|---|---|
| Processing symbol | A process such as addition or subtraction of two numbers, or movement of data in computer memory. |
| Decision symbol | Depending on the answer, yes or no, a particular path is chosen. |
| Input symbol | Specifies quantities which are to be read before processing can proceed. |
| Output symbol | Specifies quantities which are to be output. |
| Terminal symbol | Indicates the start or end of the flow chart; this symbol is also used as a connector. |


Example 1.12 Draw a flow chart to find the real roots of the equation $ax^2 + bx + c = 0$, $a, b, c$ real, using the method

$$x_1 = \frac{-b + \sqrt{b^2 - 4ac}}{2a}, \qquad x_2 = \frac{-b - \sqrt{b^2 - 4ac}}{2a}$$

for ten sets of values of $a, b, c$. The flow chart is given in Fig. 1.1.

Fig. 1.1 Flow chart for finding real roots of the quadratic equation (figure not reproduced)

The flow chart can easily be translated into any high level language, for example FORTRAN, ALGOL or BASIC, and executed on the computer.
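As an illustration of such a translation, the steps of Example 1.11 can be sketched in Python. The function name `real_roots` and the sample data are hypothetical; instead of a counter $I$ running from 1 to 10, the loop simply runs over however many $(a, b, c)$ sets it is given, and the two messages mirror the branches of Steps 3 and 5.

```python
import math


def real_roots(data_sets):
    """Steps of Example 1.11 applied to each (a, b, c) in data_sets.

    Returns one entry per set: the pair (x1, x2), or a message string.
    """
    results = []
    for a, b, c in data_sets:            # Steps 1, 2, 9, 10: one pass per data set
        if a == 0:                       # Step 3: method (1.26) would divide by zero
            results.append("wrong data")
            continue
        d = b * b - 4 * a * c            # Step 4
        if d < 0:                        # Step 5: no real roots
            results.append("complex roots")
            continue
        e = math.sqrt(d)                 # Step 6
        x1 = (-b + e) / (2 * a)          # Step 7: method (1.26)
        x2 = (-b - e) / (2 * a)
        results.append((x1, x2))         # Step 8
    return results                       # Step 11


data = [(1, -3, 2), (0, 2, 1), (1, 0, 1)]
for abc, result in zip(data, real_roots(data)):
    print(abc, result)
# (1, -3, 2) (2.0, 1.0)
# (0, 2, 1) wrong data
# (1, 0, 1) complex roots
```

Method (1.26) is used exactly as stated, so when $b^2$ is much larger than $|4ac|$ one of the two roots suffers the same cancellation as in the four digit example earlier in this chapter.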

1.5 COMPUTER SOFTWARE

The purpose of computer software is to provide a useful computational tool for users. Writing computer software requires a good understanding of numerical analysis and of the art of programming. Good computer software must satisfy certain criteria: self-starting, accuracy and reliability, a minimum number of levels, good documentation, ease of use and portability.

Computer software should be self-starting as far as possible. A numerical method very often involves some parameters whose values are determined by the properties of the problem to be solved. For example, in finding the roots of an equation, one or more initial approximations to the root have to be given. The program will be more acceptable if it can be made automatic, in the sense that the program selects the initial approximations itself rather than requiring the user to specify them. Accuracy and reliability are measures of the performance of an algorithm on all similar problems: once an error criterion is fixed, the program should produce solutions of all similar problems to that accuracy. The program should be able to prevent and handle most exceptional conditions, like division by zero, infinite loops, etc. The structure of the program should avoid many levels. For example, many programs used to find the roots of an equation have three levels: the program calls the zero-finder (parameters, function), and the zero-finder calls the function subprogram. The more levels a program has, the more time is wasted in linking between them and transferring parameters. Good documentation and ease of use are two very important criteria. The program must have comment lines or comment paragraphs at various places explaining and clarifying the method used and the steps involved. Good documentation should state what kind of problems can be solved using the software, what parameters are to be supplied, what accuracy can be achieved, which method has been used, and other relevant details.


The criterion of portability means that the software should be made independent of the computer being used as far as possible. Since machines differ in their hardware configurations, complete machine independence may not be possible. However, the aim when writing computer software should be that the same program can run on any machine with minimal modifications. Machine dependent constants, for example the machine error EPS, must be avoided or generated automatically. A standard dialect of the programming language should be used rather than a local dialect.
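One common way to generate EPS automatically is to halve a trial value until adding it to 1.0 no longer changes the result. The sketch below assumes Python, whose floats are IEEE 754 double precision on common platforms; it takes EPS to be the gap between 1.0 and the next larger machine number (some texts call half of this the unit round-off). The name `machine_eps` is illustrative.

```python
import sys


def machine_eps():
    """Generate EPS at run time instead of hard-coding a machine constant.

    Halve eps while 1.0 + eps/2 is still distinguishable from 1.0; the
    final eps is the spacing between 1.0 and the next machine number.
    """
    eps = 1.0
    while 1.0 + eps / 2.0 > 1.0:
        eps /= 2.0
    return eps


print(machine_eps())                              # 2.220446049250313e-16
print(machine_eps() == sys.float_info.epsilon)    # True for IEEE 754 doubles
```

In Python the same constant is also available portably as `sys.float_info.epsilon`, which the last line uses as a cross-check.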

CHAPTER 2

Transcendental and Polynomial Equations

2.1 INTRODUCTION

A problem of great importance in applied mathematics and engineering is that of determining the roots of an equation of the form

$$f(x) = 0 \qquad (2.1)$$

The function $f(x)$ may be given explicitly, for example

$$f(x) \equiv P_n(x) = x^n + a_1 x^{n-1} + \cdots + a_{n-1} x + a_n,$$

a polynomial of degree $n$ in $x$, or $f(x)$ may be known only implicitly as a transcendental function.

Definition 2.1 A number $\xi$ is a solution of $f(x) = 0$ if $f(\xi) = 0$. Such a solution $\xi$ is called a root or a zero of $f(x) = 0$. Geometrically, a root of the equation (2.1) is the value of $x$ at which the graph of $y = f(x)$ intersects the $x$-axis.

Definition 2.2 If we can write (2.1) as

$$f(x) \equiv (x - \xi)^m g(x) = 0$$

where $g(x)$ is bounded and $g(\xi) \neq 0$, then $\xi$ is called a multiple root of multiplicity $m$. For $m = 1$, the number $\xi$ is said to be a simple root.

There are generally two types of methods used to find the roots of the equation (2.1).

(i) Direct methods. These methods give the exact value of the roots in a finite number of steps. Further, the methods give all the roots at the same time. For example, a direct method gives the root of the linear or first degree equation

$$a_0 x + a_1 = 0, \qquad a_0 \neq 0$$

as

$$x = -\frac{a_1}{a_0}$$

Similarly, the roots of the quadratic equation

$$a_0 x^2 + a_1 x + a_2 = 0, \qquad a_0 \neq 0 \qquad (2.3)$$

are given by

$$x = \frac{-a_1 \pm \sqrt{a_1^2 - 4 a_0 a_2}}{2 a_0}$$

(ii) Iterative methods. These methods are based on the idea of successive approximation, i.e. starting with one or more initial approximations to the root, we obtain a sequence of approximants or iterates $\{x_k\}$, which in the limit converges to the root. The methods may give only one root at a time. For example, to solve the quadratic equation (2.3) we may choose any one of the following iteration methods:

$$\begin{aligned}
&\text{(a)} \quad x_{k+1} = -\frac{a_0 x_k^2 + a_2}{a_1}, && k = 0, 1, 2, \ldots \\
&\text{(b)} \quad x_{k+1} = -\frac{a_2}{a_0 x_k + a_1}, && k = 0, 1, 2, \ldots \\
&\text{(c)} \quad x_{k+1} = -\frac{a_1 x_k + a_2}{a_0 x_k}, && k = 0, 1, 2, \ldots
\end{aligned} \qquad (2.4)$$

The convergence of the sequence $\{x_k\}$ to a number $\xi$, the root of the equation (2.3), depends on the rearrangement (2.4) and the choice of the starting approximation $x_0$.

Definition 2.3 A sequence of iterates $\{x_k\}$ is said to converge to the root $\xi$ if

$$\lim_{k \to \infty} |x_k - \xi| = 0$$
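To see how convergence depends on the rearrangement (2.4), the sketch below applies each of (a), (b) and (c) to the illustrative equation $x^2 - 3x + 2 = 0$, with roots 1 and 2 (the coefficients and the starting value $x_0 = 1.5$ are our choice, not the book's). As standard background not taken from this excerpt, a one point iteration $x_{k+1} = \phi(x_k)$ converges locally to $\xi$ when $|\phi'(\xi)| < 1$. Here $\phi_a'(x) = 2x/3$, $\phi_b'(x) = 2/(3 - x)^2$ and $\phi_c'(x) = 2/x^2$, so (a) and (b) are attracted to the root 1, while (c) is attracted to the root 2.

```python
A0, A1, A2 = 1.0, -3.0, 2.0   # x**2 - 3x + 2 = 0, roots 1 and 2 (illustrative)


def phi_a(x):
    return -(A0 * x * x + A2) / A1      # rearrangement (a)


def phi_b(x):
    return -A2 / (A0 * x + A1)          # rearrangement (b)


def phi_c(x):
    return -(A1 * x + A2) / (A0 * x)    # rearrangement (c)


def iterate(phi, x0, n):
    """Apply the one point iteration x_{k+1} = phi(x_k) n times."""
    x = x0
    for _ in range(n):
        x = phi(x)
    return x


for name, phi in (("a", phi_a), ("b", phi_b), ("c", phi_c)):
    print(name, iterate(phi, 1.5, 200))   # (a), (b) approach 1; (c) approaches 2
```

Starting (a) from $x_0 = 2.5$ instead makes the iterates grow without bound ($2.75, 3.19, \ldots$), since $|\phi_a'(x)| > 1$ near the root 2.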

If $x_k, x_{k-1}, \ldots, x_{k-m+1}$ are $m$ approximations to the root, then a multipoint iteration method is defined as

$$x_{k+1} = \phi(x_k, x_{k-1}, \ldots, x_{k-m+1}) \qquad (2.5)$$

The function $\phi$ is called the multipoint iteration function. For $m = 1$, we get the one point iteration method

$$x_{k+1} = \phi(x_k)$$

[…] be determined from

$$n \geq \frac{\log(b_0 - a_0) - \log \varepsilon}{\log 2}$$

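The minimum number of iterations required for converging to a root in the interval (0, 1) for a given $\varepsilon$ is listed in Table 2.2.

Table 2.2 Number of Iterations

| $\varepsilon$ | $10^{-2}$ | $10^{-3}$ | $10^{-4}$ | $10^{-5}$ | $10^{-6}$ | $10^{-7}$ |
|---|---|---|---|---|---|---|
| $n$ | 7 | 10 | 14 | 17 | 20 | 24 |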

The bisection method requires a large number of iterations to achieve a reasonable degree of accuracy for the root. It requires one function evaluation for each iteration.
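The bound $n \geq (\log(b_0 - a_0) - \log \varepsilon)/\log 2$ can be checked against Table 2.2 directly. A minimal sketch, taking $n$ as the smallest integer that satisfies the bound (the name `bisection_steps` is ours):

```python
import math


def bisection_steps(a0, b0, eps):
    """Smallest integer n with n >= (log(b0 - a0) - log(eps)) / log 2,
    i.e. the number of halvings after which (b0 - a0) / 2**n <= eps."""
    return math.ceil((math.log(b0 - a0) - math.log(eps)) / math.log(2))


print([bisection_steps(0.0, 1.0, 10.0 ** -p) for p in range(2, 8)])
# [7, 10, 14, 17, 20, 24], the row n of Table 2.2
```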


Example 2.2 Perform five iterations of the bisection method to obtain the smallest positive root of the equation

$$f(x) = x^3 - 5x + 1 = 0$$

Since $f(0) > 0$ and $f(1) < 0$, the smallest positive root lies in the interval (0, 1). Taking $a_0 = 0$, $b_0 = 1$, we get

$$m_1 = \tfrac{1}{2}(a_0 + b_0) = \tfrac{1}{2}(0 + 1) = 0.5, \qquad f(m_1) = -1.375$$

and $f(a_0) f(m_1) < 0$ […]
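The five bisection steps requested in Example 2.2 can be sketched as a plain bisection loop (the function name and interface are illustrative). It keeps $f$ at the left end point so that each iteration costs one new function evaluation. Only the first midpoint, 0.5 with $f(0.5) = -1.375$, appears in this excerpt; the later midpoints in the comment are what this code produces.

```python
def bisection(f, a, b, iterations):
    """Return the midpoints m_1, m_2, ... of the bisection method on (a, b)."""
    fa = f(a)
    if fa * f(b) >= 0:
        raise ValueError("f(a) and f(b) must have opposite signs")
    mids = []
    for _ in range(iterations):
        m = 0.5 * (a + b)
        mids.append(m)
        fm = f(m)                  # the one new evaluation in this iteration
        if fm == 0.0:              # landed exactly on the root
            break
        if fa * fm < 0:            # sign change in (a, m): keep the left half
            b = m
        else:                      # sign change in (m, b): keep the right half
            a, fa = m, fm
    return mids


def f(x):
    return x**3 - 5*x + 1


print(bisection(f, 0.0, 1.0, 5))   # [0.5, 0.25, 0.125, 0.1875, 0.21875]
```

After these five steps the root is bracketed by (0.1875, 0.21875).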

This is called the secant or chord method. Geometrically, in this method we replace the function $f(x)$ by a straight line, or chord, passing through the points $(x_k, f_k)$ and $(x_{k-1}, f_{k-1})$, and take the point of intersection of the straight line with the $x$-axis as the next approximation to the root. If the approximations are chosen at each step such that $f_k f_{k-1} < 0$, then the method (2.11) or (2.12) is known as the regula falsi method. The method is shown graphically in Figure 2.2. Since $(x_{k-1}, f_{k-1})$ and $(x_k, f_k)$ are known before the start of the iteration, the secant method requires one function evaluation per step.

Example 2.3 Use the secant and regula falsi methods to determine the root of the equation

$$\cos x - x e^x = 0$$

Taking the initial approximations as $x_0 = 0$, $x_1 = 1$, we have for the secant method

$$f(0) = 1, \qquad f(1) = \cos 1 - e = -2.177979523$$

$$x_2 = \frac{x_0 f_1 - x_1 f_0}{f_1 - f_0} = 0.3146653378$$

$$f(0.3146653378) = 0.519871175$$

$$x_3 = \frac{x_1 f_2 - x_2 f_1}{f_2 - f_1} = 0.4467281466$$

The computed results are tabulated in Table 2.4.

Table 2.4 Approximations to the Root by the Secant and the Regula Falsi Methods

| k | Secant $x_{k+1}$ | Secant $f(x_{k+1})$ | Regula falsi $x_{k+1}$ | Regula falsi $f(x_{k+1})$ |
|---|---|---|---|---|
| 1 | 0.3146653378 | 0.519871 | 0.3146653378 | 0.519871 |
| 2 | 0.4467281466 | 0.203545 | 0.4467281466 | 0.203545 |
| 3 | 0.5317058606 | -0.429311(-01) | 0.4940153366 | 0.708023(-01) |
| 4 | 0.5169044676 | 0.259276(-02) | 0.5099461404 | 0.236077(-01) |
| 5 | 0.5177474653 | 0.301119(-04) | 0.5152010099 | 0.776011(-02) |
| 6 | 0.5177573708 | -0.215132(-07) | 0.5169222100 | 0.253886(-02) |
| 7 | 0.5177573637 | 0.178663(-12) | 0.5174846768 | 0.829358(-03) |
| 8 | 0.5177573637 | 0.222045(-15) | 0.5176683450 | 0.270786(-03) |
| 10 | | | 0.5177478783 | 0.288554(-04) |
| 20 | | | 0.5177573636 | 0.396288(-09) |

The numbers within the parentheses denote powers of 10; for example, 0.259276(-02) means $0.259276 \times 10^{-2}$.

2.3.2 The Newton-Raphson Method

We determine $a_0$ and $a_1$ in (2.8) using the conditions

$$f_k = a_0 x_k + a_1, \qquad f'_k = a_0 \qquad (2.13)$$

where a prime denotes differentiation with respect to $x$. On substituting $a_0$ and $a_1$ from (2.13) in (2.9) and representing the approximate value of $x$ by $x_{k+1}$, we obtain

$$x_{k+1} = x_k - \frac{f_k}{f'_k} \qquad (2.14)$$

This method is called the Newton-Raphson method. The method (2.14) may also be obtained directly from (2.12) by taking the limit $x_{k-1} \to x_k$. In this limit, the chord passing through the points $(x_k, f_k)$ and $(x_{k-1}, f_{k-1})$ becomes the tangent at the point $(x_k, f_k)$. Thus, in this case the problem of finding the root of the equation (2.1) is equivalent to finding the point of intersection of the tangent to the curve $y = f(x)$ at the point $(x_k, f_k)$ with the $x$-axis. The method is shown graphically in Figure 2.3. The Newton-Raphson method requires two evaluations, $f_k$ and $f'_k$, for each iteration.

Example 2.4 Apply Newton-Raphson's method to determine a root of the equation

$$f(x) \equiv \cos x - x e^x = 0$$

such that $|f(x^*)| < 10^{-8}$, where $x^*$ is the approximation to the root. We write (2.14) in the form

$$x_{k+1} = x_k - \Delta x_k, \qquad \text{where} \quad \Delta x_k = \frac{f_k}{f'_k}, \qquad k = 0, 1, 2, \ldots$$
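The excerpt stops before the iterates of Example 2.4 are listed, so the sketch below does not reproduce the book's numbers. It implements (2.14) with the stopping test $|f(x_k)| < 10^{-8}$ from the example, using $f'(x) = -\sin x - (1 + x)e^x$; the starting value $x_0 = 1$, the iteration cap and the zero-derivative guard are our assumptions.

```python
import math


def newton(f, fprime, x0, tol=1e-8, max_iter=50):
    """Newton-Raphson iteration (2.14): x_{k+1} = x_k - f_k / f'_k.

    Stops when |f(x_k)| < tol, as in Example 2.4. Returns all iterates.
    """
    xs = [x0]
    x = x0
    for _ in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            break
        dfx = fprime(x)
        if dfx == 0.0:
            raise ZeroDivisionError("f'(x_k) = 0: the tangent is horizontal")
        x = x - fx / dfx          # two evaluations per step: f_k and f'_k
        xs.append(x)
    return xs


def f(x):
    return math.cos(x) - x * math.exp(x)


def fprime(x):
    return -math.sin(x) - (1.0 + x) * math.exp(x)


xs = newton(f, fprime, x0=1.0)
print(len(xs) - 1, xs[-1], f(xs[-1]))
```

Each Newton step here costs two evaluations, $f$ and $f'$, against one for the secant method.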


| Program | Description |
|---|---|
| Chapter 1: Mathematical Preliminaries and Floating-Point Representation | |
| first.f | First programming experiment |
| pi.f | Simple code to illustrate double precision |
| xsinx.f | Example of programming f(x) = x - sin x carefully |
| Chapter 2: Linear Systems | |
| ngauss.f | Naive Gaussian elimination to solve linear systems |
| gauss.f | Gaussian elimination with scaled partial pivoting |
| tri_penta.f | Solves tridiagonal systems |
| Chapter 3: Locating Roots of Equations | |
| bisect1.f | Bisection method (version 1) |
| bisect2.f | Bisection method (version 2) |
| newton.f | Sample Newton method |
| secant.f | Secant method |
| Chapter 4: Interpolation and Numerical Differentiation | |
| coef.f | Newton interpolation polynomial at equidistant points |
| deriv.f | Derivative by central differences/Richardson extrapolation |
| Chapter 5: Numerical Integration | |
| sums.f | Upper/lower sums experiment for an integral |
| trapezoid.f | Trapezoid rule experiment for an integral |
| romberg.f | Romberg arrays for three separate functions |
| rec_simpson.f | Adaptive scheme for Simpson's rule |
| Chapter 6: Spline Functions | |
| spline1.f | Interpolates table using a first-degree spline function |
| spline3.f | Natural cubic spline function at equidistant points |
| bspline2.f | Interpolates table using a quadratic B-spline function |
| schoenberg.f | Interpolates table using Schoenberg's process |
| Chapter 7: Initial Value Problems | |
| euler.f | Euler's method for solving an ODE |
| taylor.f | Taylor series method (order 4) for solving an ODE |
| rk4.f | Runge-Kutta method (order 4) for solving an IVP |
| rk45.f | Runge-Kutta-Fehlberg method for solving an IVP |
| rk45ad.f | Adaptive Runge-Kutta-Fehlberg method |
| taylorsys.f | Taylor series method (order 4) for systems of ODEs |
| rk4sys.f | Runge-Kutta method (order 4) for systems of ODEs |
| amrk.f | Adams-Moulton method for systems of ODEs |
| amrkad.f | Adaptive Adams-Moulton method for systems of ODEs |
| Chapter 8: More on Systems of Linear Equations | |
| Chapter 9: Least Squares Methods | |
| Chapter 10: Monte Carlo Methods and Simulation | |
| test_random.f | Example to compute, store, and print random numbers |
| coarse_check.f | Coarse check on the random-number generator |
| double_integral.f | Numerical value of integral over a 2D disk by Monte Carlo |
| volume_region.f | Volume of a complicated 3D region by Monte Carlo |
| cone.f | Ice cream cone example |
| loaded_die.f | Loaded die problem simulation |
| birthday.f | Birthday problem simulation |
| needle.f | Buffon's needle problem simulation |
| two_die.f | Two dice problem simulation |
| shielding.f | Neutron shielding problem simulation |
| Chapter 11: Boundary Value Problems | |
| bvp1.f | Boundary value problem solved by discretization technique |
| bvp2.f | Boundary value problem solved by shooting method |
| Chapter 12: Partial Differential Equations | |
| parabolic1.f | Parabolic partial differential equation problem |
| parabolic2.f | Parabolic PDE problem solved by Crank-Nicolson method |
| hyperbolic.f | Hyperbolic PDE problem solved by discretization |
| seidel.f | Elliptic PDE solved by discretization/Gauss-Seidel method |
| Chapter 13: Minimization of Functions | |
| Chapter 14: Linear Programming Problems | |

Additional programs can be found at the textbook's anonymous ftp site:
